A new image recognition algorithm takes its inspiration from the way humans see.

The context: When humans look at a new image of something, we identify what it is based on a collection of recognizable features. We might identify the species of a bird, for example, by the contour of its beak, the colors of its plumage, and the shape of its feet. A neural network, however, simply looks for pixel patterns across the entire image without discriminating between the actual bird and its background. This makes the neural network more prone to mistakes and makes those mistakes harder for humans to diagnose.

How it works: Rather than train the neural network on full images of birds, researchers from Duke University and MIT Lincoln Laboratory trained it to recognize individual features: the beak and head shape of each species and the coloration of its feathers. Presented with a new image of a bird, the algorithm searches for those recognizable features and predicts which species each one belongs to. It then uses the cumulative evidence to make a final decision.
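To make the search step concrete, here is a minimal sketch of matching learned features against regions of a new image. It is an illustration under stated assumptions, not the researchers' implementation: the two-number vectors, the feature names, and the helpers `squared_distance` and `best_match` are all hypothetical, and the similarity score (one over one plus squared distance) is just one simple choice.

```python
from typing import Dict, List, Sequence, Tuple


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def best_match(patches: List[Sequence[float]],
               feature: Sequence[float]) -> Tuple[int, float]:
    """Return (patch_index, similarity) for the patch closest to the feature.

    Similarity is 1 / (1 + squared distance), so an exact match scores 1.0
    and the score shrinks toward 0 as the patch moves away from the feature.
    """
    scored = [(1.0 / (1.0 + squared_distance(p, feature)), i)
              for i, p in enumerate(patches)]
    similarity, index = max(scored)
    return index, similarity


# Hypothetical toy data: each image region is summarized by two numbers.
patches = [[0.1, 0.9], [0.8, 0.2], [0.5, 0.5]]

# Hypothetical learned features, described in the same two-number space.
learned_features: Dict[str, List[float]] = {
    "red head": [0.8, 0.2],
    "barred feathers": [0.1, 0.8],
}

# For every learned feature, find where in the image it matches best.
matches = {name: best_match(patches, vec)
           for name, vec in learned_features.items()}

for name, (index, similarity) in matches.items():
    print(f"{name}: best patch {index}, similarity {similarity:.2f}")
```

Because each match records which patch it came from, the same bookkeeping can later be used to show a person where in the image a feature was found.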

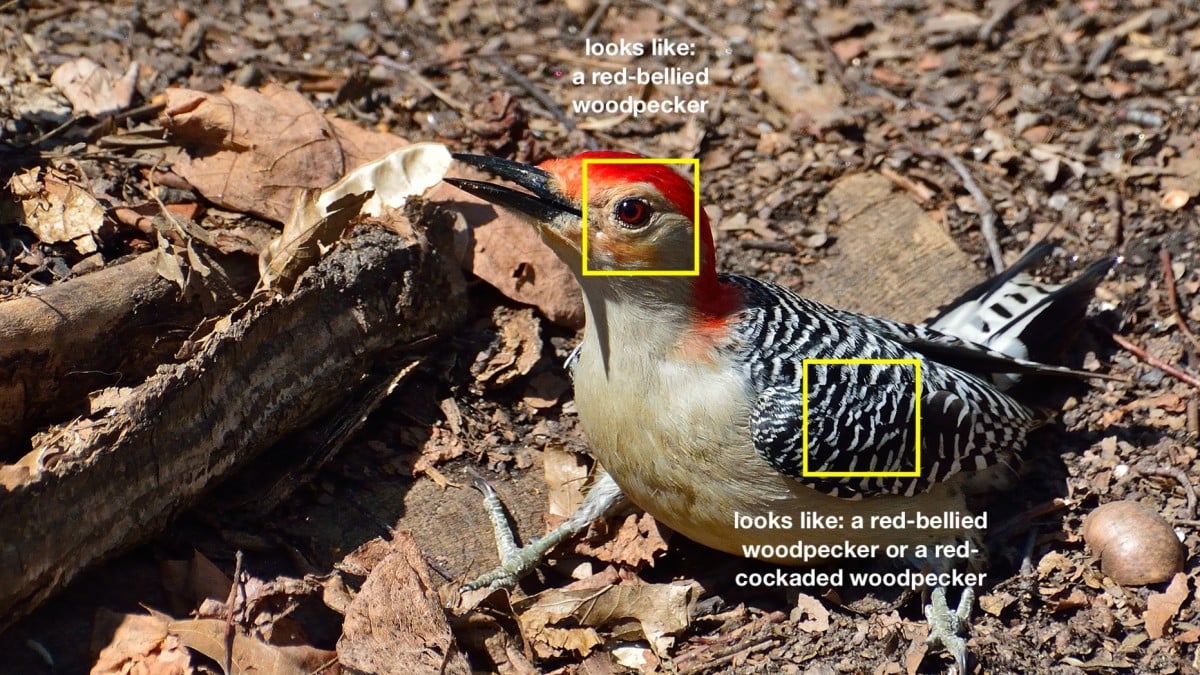

An example: For a picture of a red-bellied woodpecker, the algorithm might find two recognizable features that it’s been trained on: the black-and-white pattern of its feathers and the red coloring of its head. The first feature could match with two possible bird species: the red-bellied or the red-cockaded woodpecker. But the second feature would match best with the former.

From the two pieces of evidence, the algorithm reasons that the picture most likely shows a red-bellied woodpecker. It then displays the pictures of the features it found to explain to a human how it reached its decision.
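The woodpecker example can be sketched as a simple tally of evidence. The weights below are invented for illustration and are not taken from the research; `evidence` and `classify` are hypothetical names, and a plain sum is only one way cumulative evidence could be combined.

```python
from typing import Dict, List, Tuple

# Hypothetical evidence weights: how strongly each feature points to each species.
evidence: Dict[str, Dict[str, float]] = {
    "black-and-white feather pattern": {
        "red-bellied woodpecker": 0.5,
        "red-cockaded woodpecker": 0.5,
    },
    "red head coloring": {
        "red-bellied woodpecker": 0.9,
        "red-cockaded woodpecker": 0.1,
    },
}


def classify(found_features: List[str],
             evidence: Dict[str, Dict[str, float]]) -> Tuple[str, Dict[str, float]]:
    """Sum the evidence from every feature found and pick the top species."""
    totals: Dict[str, float] = {}
    for feature in found_features:
        for species, weight in evidence[feature].items():
            totals[species] = totals.get(species, 0.0) + weight
    best = max(totals, key=lambda species: totals[species])
    return best, totals


found = ["black-and-white feather pattern", "red head coloring"]
prediction, totals = classify(found, evidence)

print("Prediction:", prediction)
# The explanation is just the list of features that contributed to the winner.
for feature in found:
    print(f"  because of: {feature} (weight {evidence[feature][prediction]})")
```

The feather pattern alone leaves the two species tied; the head coloring breaks the tie, which mirrors the reasoning described above.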

Why it matters: For image recognition algorithms to be more useful in high-stakes environments such as hospitals, where they might help a doctor classify a tumor, they need to explain how they arrived at their conclusions in a way humans can understand. Such explanations help humans trust the algorithms, and they also make it easier to spot when the logic is wrong.

Through testing, the researchers also demonstrated that building this interpretability into their algorithm didn't hurt its accuracy. On both the bird species identification task and a car model identification task, their method came close to, and in some cases exceeded, state-of-the-art results achieved by non-interpretable algorithms.

An abridged version of this story originally appeared in our AI newsletter The Algorithm.
