- Researchers at MIT and IBM have teamed up to create a repository of images that challenge the weaknesses inherent in computer vision systems.

- Part of the problem with machine learning is that it has no basis of knowledge to fall back on, as a human does, when an object is taken out of context.

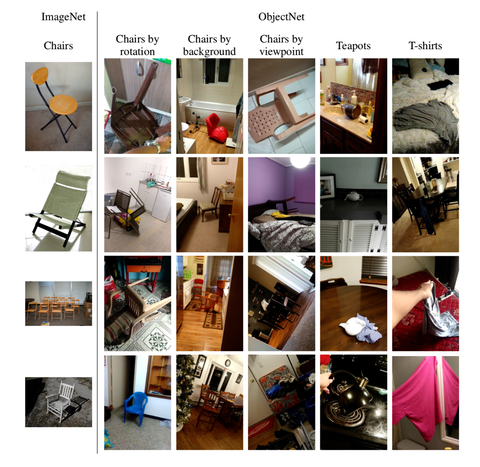

- Called ObjectNet, the set of images presents new challenges for existing machine learning systems. The researchers hope that the engineers building computer vision algorithms will use the repository to improve their systems.

If a machine learning algorithm is shown videos of an apple falling from a tree 10 million times, it will predict the apple’s inevitable plunge the next time it sees one. But not every apple is part of a Newtonian experiment, and machine learning doesn’t know that outside of its very limited context.

This lack of situational awareness is a big problem with machine learning. Left to rely on just its training data, machine learning can be kind of dumb, unable to discern common objects in uncommon locations, like a hammer on a bed (pictured above) instead of a workbench.

To improve machine learning’s IQ, a team of Massachusetts Institute of Technology and IBM researchers is making public a whole database of imperfect test photos designed to challenge existing systems. It’s called ObjectNet, and its primary goal is to push technologists toward new image recognition solutions.

Perfectly Imperfect

ObjectNet—a play on ImageNet, a crowdsourced photo database built for AI research—contains over 50,000 “image tests,” which the researchers are careful not to call training data.

ObjectNet isn’t meant to be a repository of training data for teaching object recognition. Instead, its images test an algorithm’s situational awareness, such as whether it can recognize a pair of oven mitts placed on the floor rather than on a kitchen table.

This situational disconnect comes from simple sample bias. If you want an autonomous vehicle to operate in both good weather and bad, but train its computer vision software only on images of sunny days, the car will basically have a technological spasm when it sees snow. The same is true of most machine learning algorithms.
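To make the sunny-versus-snowy problem concrete, here is a minimal Python sketch. It is a toy, not how any real vehicle works: the single "brightness" feature, the labels, and every number are invented for illustration. A simple nearest-centroid classifier is fit on sunny examples only, then scored on sunny and snowy test sets.

```python
# Toy illustration of sample bias. All values below are invented.
from statistics import mean

def fit_centroids(samples):
    """Learn one average feature value per label (nearest-centroid classifier)."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, value):
    """Return the label whose centroid is closest to the given value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

def accuracy(centroids, samples):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(predict(centroids, value) == label for value, label in samples)
    return correct / len(samples)

# Hypothetical feature: average brightness (0 to 1) of an image patch.
sunny_train = [(0.20, "road"), (0.25, "road"), (0.30, "road"),
               (0.70, "car"), (0.75, "car"), (0.80, "car")]
sunny_test = [(0.22, "road"), (0.28, "road"), (0.72, "car"), (0.78, "car")]
# In snow the road is bright too, so brightness no longer separates the classes.
snowy_test = [(0.85, "road"), (0.90, "road"), (0.72, "car"), (0.78, "car")]

model = fit_centroids(sunny_train)  # the model never sees a snowy example
sunny_acc = accuracy(model, sunny_test)
snowy_acc = accuracy(model, snowy_test)
print(f"sunny accuracy: {sunny_acc:.2f}, snowy accuracy: {snowy_acc:.2f}")
```

In this made-up setup the model is perfect on sunny images and mislabels every snowy road patch as a car, because the one correlation it learned (dark means road) does not hold outside its training conditions.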

“Most scientific experiments have controls, confounds which are removed from the data, to ensure that subjects cannot perform a task by exploiting trivial correlations in the data,” the MIT and IBM researchers wrote on the ObjectNet website. “Historically, large machine learning and computer vision datasets have lacked such controls. This has resulted in models that must be fine-tuned for new datasets and perform better on datasets than in real-world applications.”

The team noted that when object detection systems were tested against the ObjectNet images, they showed a 40 to 45 percent decrease in performance due to sample bias.

Moving Beyond Cats and Dogs

As recently as ten years ago, computer vision researchers thought it would be nearly impossible to get a machine to tell the difference between a cat and a dog, but now it can be done with over 99 percent accuracy. That’s according to Joseph Redmon, a graduate student at the University of Washington who maintains Darknet, an open-source neural network framework.

In an August 2017 TED Talk, Redmon illustrated the weaknesses of machine learning algorithms with two photos. The first was a simple image of Redmon’s Alaskan Malamute, and the system correctly identified the dog and even classified its breed. In the second image, which contained a Malamute but also a cat rolling around on a computer chair, the software recognized only the dog, ignoring the cat completely.

In another example, the creator of the world’s first universal LEGO brick sorter used a convolutional neural network (a deep learning algorithm that takes an image as input, assigns importance to features within it, and uses those features to tell one class of object from another) to build software that could distinguish between all 3,000 types of bricks in the LEGO catalogue.

However, the sorter’s creator quickly realized that the system would go bonkers if he trained it only on 3D images of the bricks from an online database, because the real-world versions didn’t look the same. So he used an extremely bright light source to standardize the light, shadow, and angles of the pieces coming down the sorter’s belt.

So the machines are smart, but with a lot of hand holding.
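For readers curious about what the "convolutional" part of a convolutional neural network does, the sketch below shows the core operation in plain Python. It is an illustration under stated assumptions, not the LEGO sorter's code: the 4x4 image and the hand-picked edge filter are invented, and a real network learns its filter weights from training data instead of having them written by hand. As in most deep learning libraries, the filter is applied without flipping (strictly speaking, a cross-correlation).

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and return the response map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A tiny grayscale "image": dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical vertical-edge filter: responds where brightness rises from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

response = convolve2d(image, vertical_edge)
for row in response:
    print(row)
```

The response is large only in the middle column, where the dark-to-bright edge sits. A network stacks many such filters, which is also why a change in lighting or shadow can change what the filters respond to.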

A Better Education

Context is pretty much everything when it comes to object detection, Raul Bravo, president and CEO of the 3D imaging startup Outsight, tells Popular Mechanics. If you mess with that context, your machine learning algorithm’s ability to identify objects is going to pale in comparison to that of a toddler.

So Outsight is developing a system to improve 3D object detection without machine learning. The idea is to combine high-powered lasers with better software, because machine learning alone is not robust enough to rely on.

“[It] can’t deal with the complexity of the situations that [it’s] faced with,” Bravo said. “In our experience, there was some other way of thinking that’s required.”

The first prong in Outsight’s approach is a solid-state laser, which is used to identify objects. It’s different from the typical lasers used in perception systems, like lidar in self-driving cars. That’s because it works in wavelengths far from the visible spectrum, so it can’t harm humans.

The laser can send a band of thousands of different colors simultaneously, so that when the light hits a physical object, the wavelengths that are returned can be matched to very specific types of material, whether plastic, metal, or cotton. That could help autonomous vehicles distinguish pedestrians from animals and give computers another dimension of comprehension in sensing, Bravo says.
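The idea of matching returned wavelengths to a material can be sketched in a few lines of Python. This is only a guess at the general principle, not Outsight's method: the reference "spectra" are invented reflectance values at four hypothetical wavelengths, and the matching rule (pick the nearest reference by Euclidean distance) is an assumption chosen for simplicity.

```python
import math

# Invented reflectance values at four hypothetical wavelengths; not real measurements.
REFERENCE_SPECTRA = {
    "plastic": [0.80, 0.60, 0.30, 0.20],
    "metal": [0.90, 0.90, 0.85, 0.80],
    "cotton": [0.40, 0.70, 0.75, 0.50],
}

def classify_material(returned, references=REFERENCE_SPECTRA):
    """Return the material whose reference spectrum is nearest to the returned one."""
    return min(references, key=lambda name: math.dist(returned, references[name]))

# A made-up measurement that sits close to the cotton reference.
measurement = [0.42, 0.68, 0.70, 0.55]
material = classify_material(measurement)
print(material)
```

The point of the sketch is that the material label comes from a physical signature, not from where the object happens to be in the scene.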

If you can remove that dependence on context, it’s possible to help the computer “see” more kinds of objects in new settings, creating a new kind of machine that could begin to rival human awareness or, at the very least, that of a human toddler.
