The state of data science & machine learning. Kaggle https://www.kaggle.com/surveys/2017 (2017).

Friedman, J., Hastie, T. & Tibshirani, R. The Elements of Statistical Learning Vol. 1 (Springer Series in Statistics, Springer, 2001).

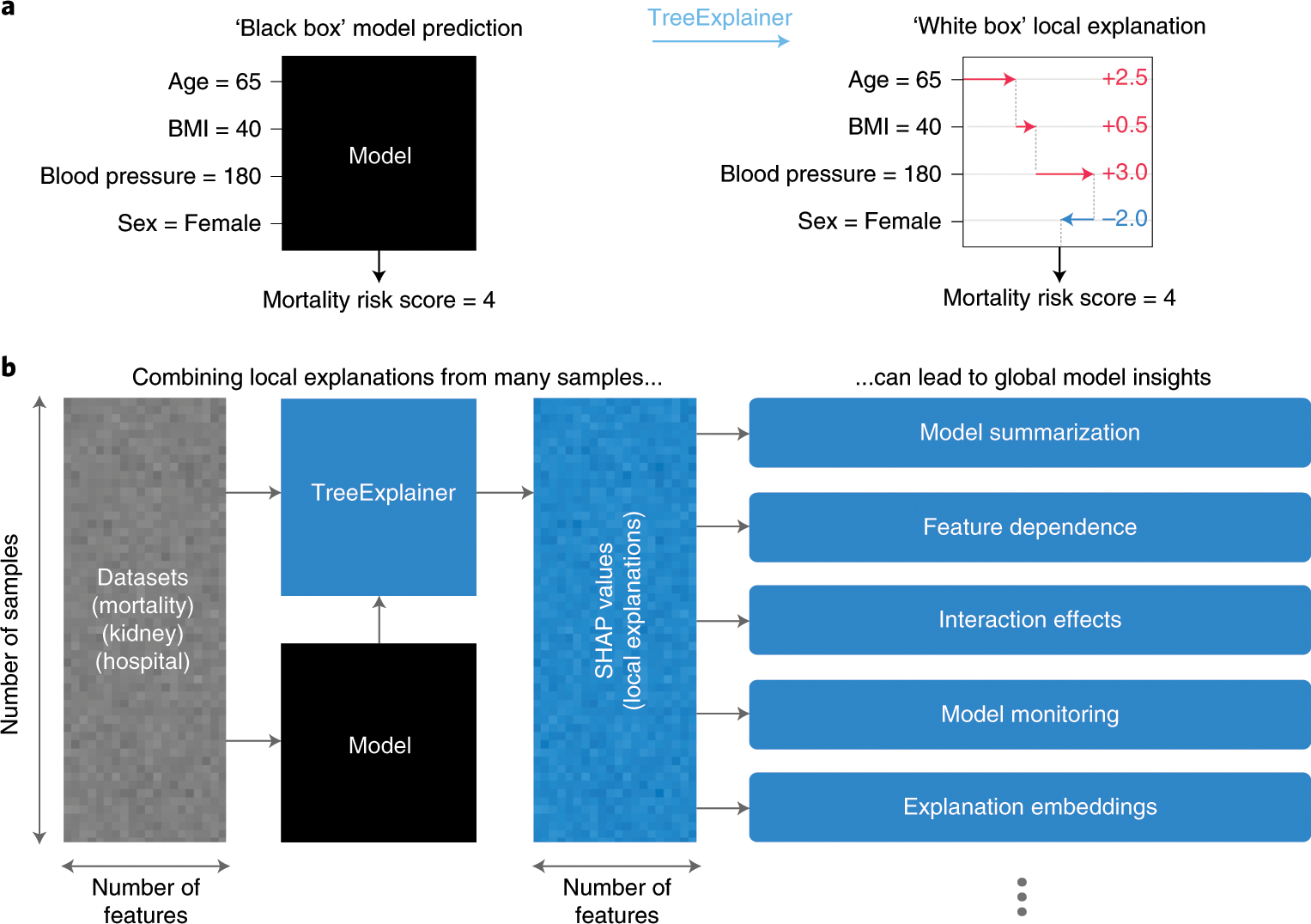

Lundberg, S. M. & Lee, S.-I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 30, 4768–4777 (2017).

Saabas, A. treeinterpreter python package. GitHub https://github.com/andosa/treeinterpreter (2019).

Ribeiro, M. T., Singh, S. & Guestrin, C. Why should I trust you?: Explaining the predictions of any classifier. In Proc. 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 1135–1144 (ACM, 2016).

Datta, A., Sen, S. & Zick, Y. Algorithmic transparency via quantitative input influence: theory and experiments with learning systems. In Proc. 2016 IEEE Symposium on Security and Privacy (SP), 598–617 (IEEE, 2016).

Štrumbelj, E. & Kononenko, I. Explaining prediction models and individual predictions with feature contributions. Knowl. Inf. Syst. 41, 647–665 (2014).

Baehrens, D. et al. How to explain individual classification decisions. J. Mach. Learn. Res. 11, 1803–1831 (2010).

Shapley, L. S. A value for n-person games. Contrib. Theor. Games 2, 307–317 (1953).

Sundararajan, M. & Najmi, A. The many Shapley values for model explanation. Preprint at https://arxiv.org/abs/1908.08474 (2019).

Janzing, D., Minorics, L. & Blöbaum, P. Feature relevance quantification in explainable AI: a causality problem. Preprint at https://arxiv.org/abs/1910.13413 (2019).

Matsui, Y. & Matsui, T. NP-completeness for calculating power indices of weighted majority games. Theor. Comput. Sci. 263, 305–310 (2001).

Fujimoto, K., Kojadinovic, I. & Marichal, J.-L. Axiomatic characterizations of probabilistic and cardinal-probabilistic interaction indices. Games Econ. Behav. 55, 72–99 (2006).

Ribeiro, M. T., Singh, S. & Guestrin, C. Anchors: high-precision model-agnostic explanations. In Proc. AAAI Conference on Artificial Intelligence (2018).

Shortliffe, E. H. & Sepúlveda, M. J. Clinical decision support in the era of artificial intelligence. JAMA 320, 2199–2200 (2018).

Lundberg, S. M. et al. Explainable machine learning predictions to help anesthesiologists prevent hypoxemia during surgery. Nat. Biomed. Eng. 2, 749–760 (2018).

Cox, C. S. et al. Plan and operation of the NHANES I Epidemiologic Followup Study, 1992. Vital Health Stat. 35, 1–231 (1997).

Chen, T. & Guestrin, C. XGBoost: a scalable tree boosting system. In Proc. 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 785–794 (ACM, 2016).

Haufe, S. et al. On the interpretation of weight vectors of linear models in multivariate neuroimaging. Neuroimage 87, 96–110 (2014).

Kim, B. et al. Interpretability beyond feature attribution: quantitative testing with concept activation vectors (TCAV). In International Conference on Machine Learning (ICML, 2018).

Yosinski, J., Clune, J., Nguyen, A., Fuchs, T. & Lipson, H. Understanding neural networks through deep visualization. In ICML Deep Learning Workshop (ICML, 2015).

Bau, D., Zhou, B., Khosla, A., Oliva, A. & Torralba, A. Network dissection: quantifying interpretability of deep visual representations. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 6541–6549 (IEEE, 2017).

Leino, K., Sen, S., Datta, A., Fredrikson, M. & Li, L. Influence-directed explanations for deep convolutional networks. In Proc. 2018 IEEE International Test Conference (ITC) 1–8 (IEEE, 2018).

SPRINT Research Group. A randomized trial of intensive versus standard blood-pressure control. N. Engl. J. Med. 373, 2103–2116 (2015).

Mozaffarian, D. et al. Heart disease and stroke statistics-2016 update: a report from the American Heart Association. Circulation 133, e38–e48 (2016).

Bowe, B., Xie, Y., Xian, H., Li, T. & Al-Aly, Z. Association between monocyte count and risk of incident CKD and progression to ESRD. Clin. J. Am. Soc. Nephrol. 12, 603–613 (2017).

Fan, F., Jia, J., Li, J., Huo, Y. & Zhang, Y. White blood cell count predicts the odds of kidney function decline in a Chinese community-based population. BMC Nephrol. 18, 190 (2017).

Zinkevich, M. Rules of machine learning: best practices for ML engineering (2017).

van Rooden, S. M. et al. The identification of Parkinson’s disease subtypes using cluster analysis: a systematic review. Mov. Disord. 25, 969–978 (2010).

Sørlie, T. et al. Repeated observation of breast tumor subtypes in independent gene expression data sets. Proc. Natl Acad. Sci. USA 100, 8418–8423 (2003).

Lapuschkin, S. et al. Unmasking Clever Hans predictors and assessing what machines really learn. Nat. Commun. 10, 1096 (2019).

Pfungst, O. Clever Hans (the Horse of Mr. von Osten): A Contribution to Experimental Animal and Human Psychology (Holt, Rinehart and Winston, 1911).

Machine Learning Recommendations for Policymakers (IIF, 2019); https://www.iif.com/Publications/ID/3574/Machine-Learning-Recommendations-for-Policymakers

Deeks, A. The judicial demand for explainable artificial intelligence. (2019).

Plumb, G., Molitor, D. & Talwalkar, A. S. Model agnostic supervised local explanations. Adv. Neural Inf. Process. Syst. 31, 2520–2529 (2018).

Young, H. P. Monotonic solutions of cooperative games. Int. J. Game Theor. 14, 65–72 (1985).

Ancona, M., Ceolini, E., Oztireli, C. & Gross, M. Towards better understanding of gradient-based attribution methods for deep neural networks. In Proc. 6th International Conference on Learning Representations (ICLR 2018) (2018).

Hooker, S., Erhan, D., Kindermans, P.-J. & Kim, B. A benchmark for interpretability methods in deep neural networks. In Conference on Neural Information Processing Systems (NIPS, 2019).

Shrikumar, A., Greenside, P., Shcherbina, A. & Kundaje, A. Not just a black box: learning important features through propagating activation differences. Preprint at https://arxiv.org/abs/1605.01713 (2016).

Lunetta, K. L., Hayward, L. B., Segal, J. & Van Eerdewegh, P. Screening large-scale association study data: exploiting interactions using random forests. BMC Genet. 5, 32 (2004).

Jiang, R., Tang, W., Wu, X. & Fu, W. A random forest approach to the detection of epistatic interactions in case-control studies. BMC Bioinformatics 10, S65 (2009).
